DiCOSApps

DiCOSApp Introduction

DiCOSApp is a web-based SaaS cloud computing service. This document describes how to access the service and the computing resources it provides.

Use DiCOSApp

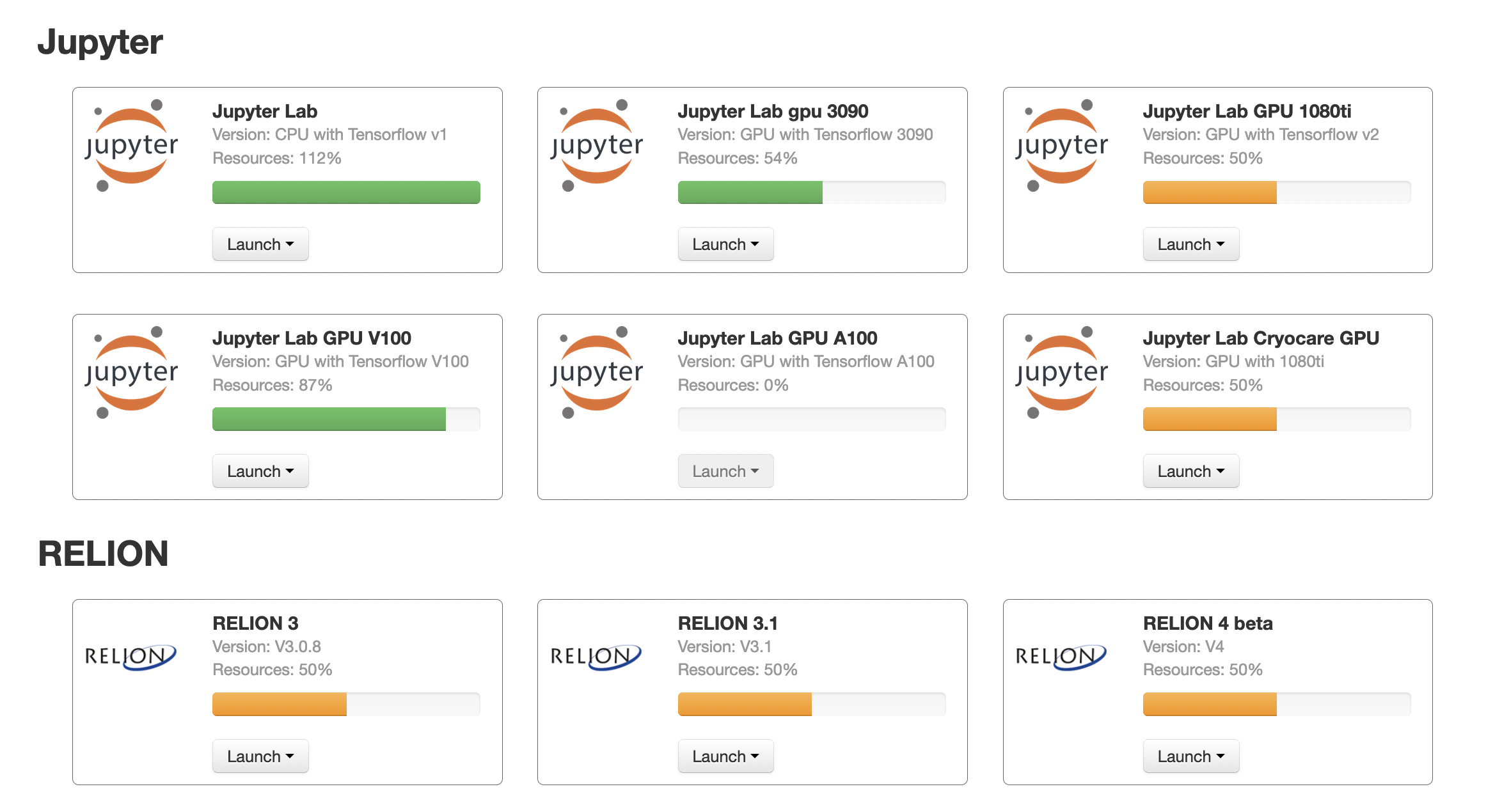

Log in to DiCOS web and go to https://dicos.grid.sinica.edu.tw/dockerapps/ to start DiCOSApps. Each DiCOSApp shows its software and the availability of its computing resources, as shown in the image.

Press the Launch button (and choose a suitable lifetime for the application, if applicable) to start the computing instance.

CryoEM Specific APPs

| APP name | CPU cores | GPU | Memory | SSD |
|---|---|---|---|---|
| CisTEM | 32 | 0 | 100GB | no |
| RELION3 | 16 | 4 (1080Ti) | 380GB | yes |
| RELION2 | 16 | 4 (1080Ti) | 380GB | yes |
| CisTEM-cluster | 40 per job, max to 600 | 0 | 256GB ~ 3840GB | no |
| CryoSPARC (no longer up to date) | 16 | 1 (1080Ti) | 380GB | yes |

Jupyter Notebooks

| APP name | CPU cores | GPU | Memory | SSD |
|---|---|---|---|---|
| JupyterLab(CPU) | 12 | 0 | 64GB | yes |
| JupyterLab(GPU) | 12 | 1 (P100) | 128GB | yes |

RELION Job Configuration

What are the ideal MPI and thread numbers for the current RELION APP?

4 MPI with 4 threads. For a GPU-accelerated process, please use 5 MPI with 4 threads.
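As a rough illustration, the recommendation above can be written as a small Python helper that assembles a command line. `mpirun -n`, `--j` (threads) and `--gpu` are standard options for RELION's MPI programs, but the function names and the use of `relion_refine_mpi` as the example program are assumptions made for this sketch. In a DiCOSApp you would normally enter the same two numbers in the RELION GUI instead.

```python
from __future__ import annotations


def recommended_layout(use_gpu: bool) -> tuple[int, int]:
    """Return (mpi_processes, threads) as recommended in this guide."""
    # 5 MPI for GPU-accelerated jobs, 4 MPI otherwise; 4 threads in both cases.
    return (5, 4) if use_gpu else (4, 4)


def relion_command(program: str, use_gpu: bool,
                   extra_args: list[str] | None = None) -> list[str]:
    """Build (but do not run) an mpirun command line for a RELION MPI program.

    `program` is e.g. "relion_refine_mpi" (an example choice, not prescribed
    by this guide); `extra_args` holds the job-specific options.
    """
    mpi, threads = recommended_layout(use_gpu)
    cmd = ["mpirun", "-n", str(mpi), program, "--j", str(threads)]
    if use_gpu:
        cmd.append("--gpu")
    return cmd + list(extra_args or [])


if __name__ == "__main__":
    print(" ".join(relion_command("relion_refine_mpi", use_gpu=True)))
```

The helper only returns the argument list, so it can be inspected or logged before anything is launched.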

What if RELION encounters an error?

RELION is usually stable. When an error occurs, it is usually caused by one of the following:

Insufficient memory or resources. For such errors, reducing the thread number usually helps. If the error persists, try reducing the MPI number.

Input file error. Checking the log and removing the problematic file it indicates may solve this issue.
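The order of adjustments for resource errors (threads first, then MPI) can be sketched as a generator of settings to try in turn. This is only an illustration: the function name and the lower bounds `min_mpi` and `min_threads` are hypothetical, and some RELION job types require a minimum number of MPI processes, so adjust the bounds to your job.

```python
from __future__ import annotations

from typing import Iterator


def fallback_layouts(mpi: int, threads: int,
                     min_mpi: int = 1,
                     min_threads: int = 1) -> Iterator[tuple[int, int]]:
    """Yield (mpi, threads) pairs to try, in the order suggested above."""
    # Step 1: keep the MPI number and reduce the thread number one at a time.
    t = threads
    while t >= min_threads:
        yield (mpi, t)
        t -= 1
    # Step 2: if errors persist, reduce the MPI number at the lowest thread count.
    m = mpi - 1
    while m >= min_mpi:
        yield (m, min_threads)
        m -= 1


if __name__ == "__main__":
    for layout in fallback_layouts(4, 4):
        print(layout)
```

Each yielded pair is one attempt; stop at the first setting for which the job completes.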

DiCOSApp Data Handling

Every user has a home space, and each group has a group space.

You can download and access your data through the endpoints slurm-ui.twgrid.org and dicos-sftp.twgrid.org using an SFTP client (such as FileZilla). All of these spaces can also be accessed from within your DiCOSApp instance.

Please see below for more information:

1. User Home space

The user home space is available at the following paths:

- ~/data/

- $HOME/data

- /dicos_ui_home/<user_name>/data

Please back up this space to a remote location periodically to keep your data safe.
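The three paths listed above are different spellings of the same location. The following minimal Python sketch, assuming a POSIX environment inside a DiCOSApp instance, expands each spelling and checks that the ones that exist resolve to the same directory. The function names and the example user name `alice` are hypothetical.

```python
from __future__ import annotations

import os
from pathlib import Path


def home_data_candidates(user_name: str) -> list[Path]:
    """Return the three spellings of the user home data path from this guide."""
    return [
        Path("~/data").expanduser(),                  # ~/data/
        Path(os.path.expandvars("$HOME/data")),       # $HOME/data
        Path("/dicos_ui_home") / user_name / "data",  # /dicos_ui_home/<user_name>/data
    ]


def same_directory(paths: list[Path]) -> bool:
    """True if the paths that exist all resolve to one directory.

    Paths that do not exist are ignored, so the result is also True
    when none of them exist.
    """
    resolved = {p.resolve() for p in paths if p.exists()}
    return len(resolved) <= 1


if __name__ == "__main__":
    candidates = home_data_candidates("alice")  # replace with your user name
    for path in candidates:
        print(path, "exists" if path.exists() else "missing")
    print("consistent:", same_directory(candidates))
```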

2. Group space

The group space is shared among group members, and the PI has full access rights and the responsibility to manage it.

This space serves as the working directory for your computing jobs.

The group space is located at:

- /ceph/work/{group_name}

Please back up your data to a remote location periodically to keep it safe.

3. CryoEM group space (CryoEM user only)

The CryoEM user and group space is at the following path:

- /activeEM/

Data Transfer Endpoint

Please transfer your data through the dicos-sftp.twgrid.org (recommended) or slurm-ui.twgrid.org endpoint, using FileZilla or another SFTP client:

- dicos-sftp.twgrid.org

- slurm-ui.twgrid.org
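For repeated transfers, the upload can also be scripted. The Python sketch below only builds an OpenSSH `sftp` batch file and command line; it does not run them. The function names, the example user `alice` and the example paths are hypothetical. Batch mode (`-b`) generally needs non-interactive authentication such as SSH keys, and this guide does not state whether the endpoints accept key-based logins.

```python
from __future__ import annotations

# Endpoints listed in this guide; the first one is the recommended endpoint.
ENDPOINTS = ("dicos-sftp.twgrid.org", "slurm-ui.twgrid.org")


def sftp_batch(uploads: list[tuple[str, str]]) -> str:
    """Return sftp batch-file text with one `put` per (local, remote) pair."""
    return "".join(f'put "{local}" "{remote}"\n' for local, remote in uploads)


def sftp_command(user: str, batch_path: str,
                 endpoint: str = ENDPOINTS[0]) -> list[str]:
    """Build the sftp command line that would execute the batch file."""
    if endpoint not in ENDPOINTS:
        raise ValueError(f"unknown endpoint: {endpoint}")
    return ["sftp", "-b", batch_path, f"{user}@{endpoint}"]


if __name__ == "__main__":
    # Hypothetical example: upload one file into a group working directory.
    print(sftp_batch([("stack.mrcs", "/ceph/work/mygroup/stack.mrcs")]), end="")
    print(" ".join(sftp_command("alice", "upload.txt")))
```

Write the batch text to a file such as `upload.txt`, then run the printed command from a terminal.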

Restore your DiCOSApp Instance

Your DiCOSApp instance might be lost when our service experiences accidental or scheduled downtime. If this happens, please restore your DiCOSApp instance by following the instructions below.

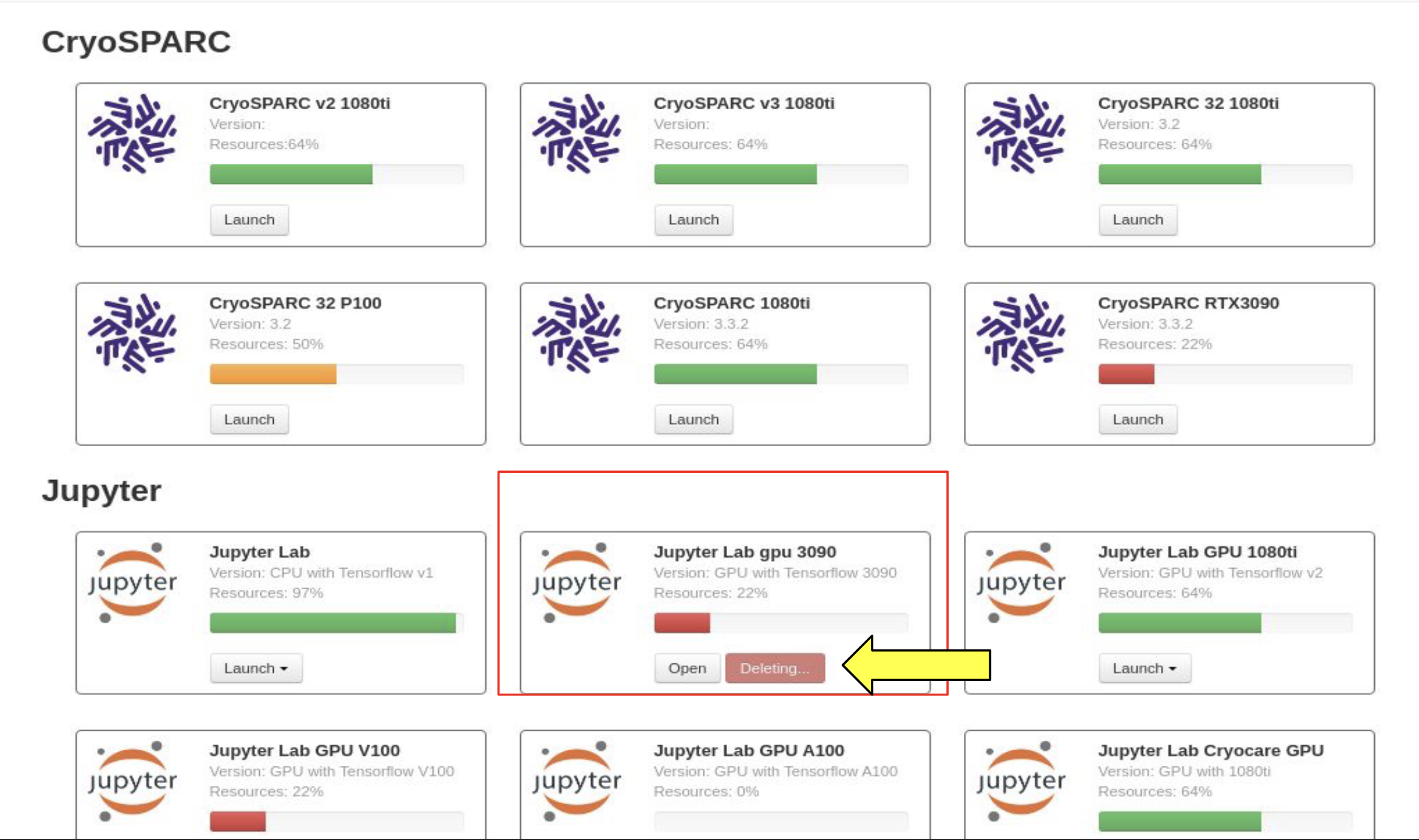

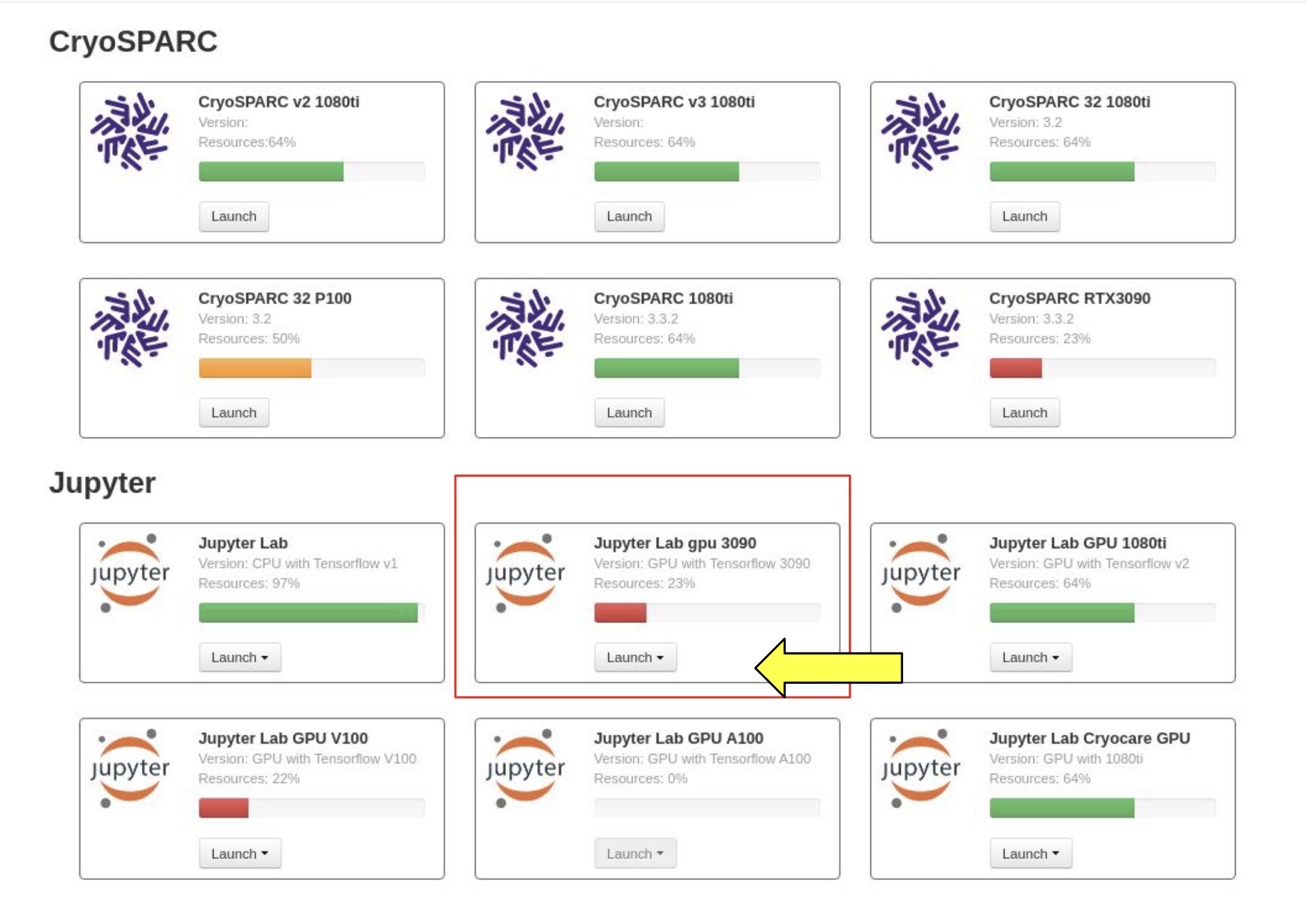

Go to the DiCOSApp page to see if there are any open DiCOSApp(s).

Click the Delete button to delete the open DiCOSApp(s).

Launch a new DiCOSApp.

Specific Software Installation

If you have special requirements for installing an application, please contact DiCOS-Support@twgrid.org.